Your Definition of Done Is a Political Document, Not a Quality Standard

Somewhere in your company's Confluence instance, there's a page called "Definition of Done." Last edited fourteen months ago. Eight to fifteen bullet points. At least three reference Jira. Every one designed to be verifiable by someone who doesn't understand what the software actually does.

That's not a quality standard. It's a treaty—a liability shield negotiated between engineering, product, and management that says: when things go wrong, here's how we prove it wasn't our fault.

Your Definition of Done didn't prevent the last production incident. But it shaped the postmortem conversation about whose fault it was.

The Anatomy of a Political Document

Let's do something most Agile coaches won't. Take a standard Definition of Done and translate each item from what it says to what it means in practice.

| What the DoD Says | What Actually Happens |
|---|---|
| Code reviewed by at least one peer | Someone clicked "Approve" in the merge request UI. They may have skimmed the diff while eating lunch. The review took between 90 seconds and 4 minutes. Nobody ran the branch locally. |
| Unit tests written and passing | The developer wrote a test covering the happy path they already knew worked. Coverage went up. The edge case that causes a production incident three weeks later remains untested. Catching that edge case is the entire point of testing, but never mind. |
| Acceptance criteria met | The story was demoed to the Product Owner, who squinted at a screen for thirty seconds, said "looks good," and moved on because they have forty-seven more stories to review before tomorrow's sprint review. |
| Documentation updated | A README was touched, or a Confluence page was created that nobody will read. It describes what was built, not why, and will be outdated within two sprints. |
| No critical or high-severity bugs | The bugs were reclassified as "medium" so the story could close. Or they were split into separate tickets and added to the backlog, where tickets go to die. |
| Deployed to staging | Deployed to a staging environment that doesn't resemble production: stale data, zero load, and three dependent services running different versions than production does. |

Every item is optimized for one thing: can we check a box in Jira?

Not: will this survive contact with real users? Not: would we bet our weekend on this not paging us at 3 AM?

Just: can we mark this story Done so the velocity chart goes up?

That's paperwork, not quality engineering.

Scenario 1: The Review That Wasn't

Thursday, 4:47 PM. Sprint ends tomorrow. Marcus has a merge request: 1,200 lines across fourteen files. Six days of work. It touches the payment processing pipeline.

He tags Priya for review. Priya is mid-flight on her own story, which also needs to close by tomorrow. She opens the MR. Sees 1,200 lines. Scrolls. Reads three function signatures. Spots a minor style issue, leaves a comment to prove she looked, clicks Approve.

Six minutes.

The DoD item "Code reviewed by at least one peer" is satisfied.

Two weeks later, a race condition in Marcus's payment code double-charges 340 customers. The postmortem pulls up the MR. Priya's approval is right there, timestamped. Someone asks why the review didn't catch it.

Priya says she reviewed it. She did. According to the process, she reviewed it. She clicked the button. The box was checked.

The DoD didn't prevent the incident. It created a paper trail that distributed blame. That's what it was designed to do.

Why Nobody Fixes It

Everyone already knows the DoD is theater.

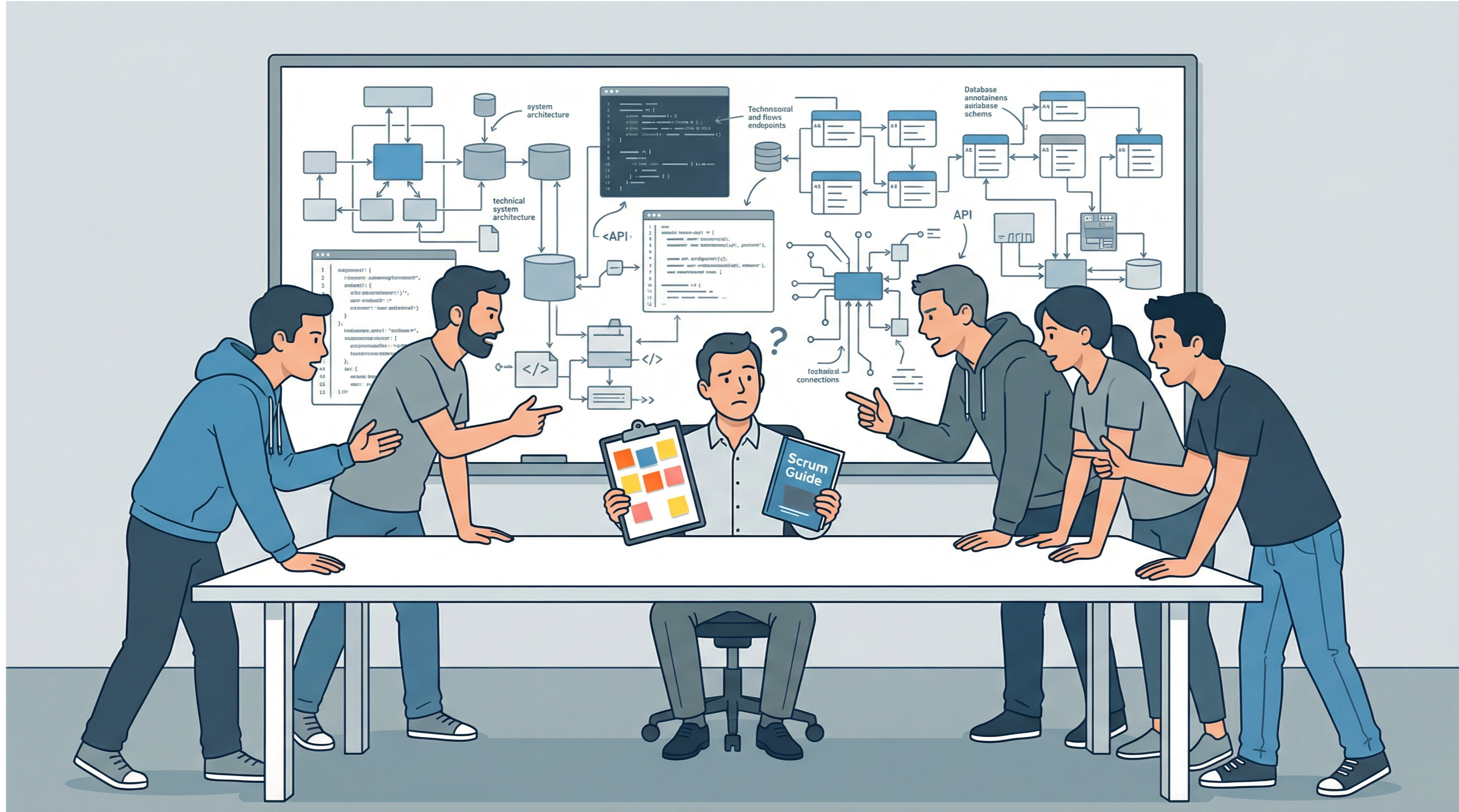

Developers know their reviews are superficial. QA knows staging doesn't mirror production. The Product Owner knows they're rubber-stamping acceptance criteria. The Scrum Master knows the whole thing is a checkbox exercise.

So why doesn't anyone fix it?

Because fixing it would require making the DoD actually rigorous. And rigorous means stories take longer, velocity drops, sprint commitments shrink, the roadmap slips, and someone walks into a room and tells a VP the team will deliver less.

That's the conversation nobody wants to have.

A stricter DoD doesn't just mean higher quality—it means lower throughput. In most organizations, throughput is what gets measured, rewarded, and presented in steering committees. Nobody got promoted for saying "we delivered fewer features but each one was production-hardened."

So the DoD stays weak. Not because the team is lazy. Because organizational incentives demand it. A rigorous DoD threatens the velocity narrative, and the velocity narrative is what keeps management comfortable.

The DoD exists at exactly the level of rigor that allows the current delivery pace to continue while providing just enough paper trail to survive an audit or a postmortem.

It's a negotiated settlement, not a quality standard.

Scenario 2: The DoD Negotiation

New quarter. New engineering director. She comes from a company that had serious reliability problems, and she wants to tighten things up. She proposes:

- Load testing required for any endpoint handling > 100 req/s

- Integration test suite must pass in a production-like environment

- Security review for any changes to authentication or authorization logic

- Mandatory 24-hour bake period in canary deployment before full rollout

Reasonable. These are things that actually prevent incidents.

The proposal hits sprint planning. Within fifteen minutes:

"If we add load testing, half our stories won't close in a single sprint."

"We don't have a production-like staging environment. Block all stories until infra builds one?"

"The security team has a two-week review queue. Our cycle time would explode."

"We promised stakeholders the checkout redesign by March. This pushes it to May."

The Product Owner looks alarmed. The Scrum Master starts talking about "pragmatic approaches." Someone suggests making the new items "aspirational guidelines" instead of mandatory.

Within a month, the new items are quietly dropped or made optional. The DoD returns to its previous state: a list of things verifiable by looking at Jira metadata.

The engineering director learns what every engineering director learns: the DoD is not yours to unilaterally strengthen. It's a political agreement. Changing it requires changing the incentive structure, the delivery expectations, and the management culture. You can't just edit a wiki page.

Risk Management Theater

The DoD is a symptom. The larger disease is what I call Risk Management Theater—performing risk mitigation optimized for appearing rigorous rather than being effective.

It shows up everywhere:

- Sprint reviews where stakeholders nod at demos instead of interrogating them

- Retrospectives that produce action items nobody follows up on

- Story point estimation that pretends uncertainty compresses into a Fibonacci number

- "Done Done" — the embarrassing term teams invent when they realize "Done" doesn't mean done

- Hardening sprints — an entire sprint fixing what the DoD was supposed to prevent

Every one of these rituals exists because the organization needs evidence that quality is being managed without actually investing in quality. Ceremonies produce artifacts. Artifacts produce audit trails. Audit trails produce plausible deniability.

The DoD is the purest expression of this pattern: a written document that claims to define what "quality" means and is almost universally inadequate for that purpose.

If your DoD is satisfied and your software still regularly causes production incidents, it's not a quality standard. It's a liability shield.

Scenario 3: A DoD That Actually Worked

This is the part where I'm supposed to be purely cynical, but honesty demands otherwise. I've seen a Definition of Done work. Once.

Small company. Twelve engineers. B2B SaaS. They'd had a brutal six months—three major customer-facing outages, two lost contracts, a front-page Hacker News mention for all the wrong reasons.

Their CTO, out of desperation more than philosophy, threw out the existing DoD and replaced it with a single rule:

"A story is Done when the engineer who built it would deploy it to every customer at 4:55 PM on a Friday without telling anyone, and sleep soundly."

No bullet points. No Jira workflow gates. One subjective, unverifiable, deeply personal standard.

It worked. Not because the rule was magic, but because of what came with it:

- The team got explicit permission to slow down. The CTO told the board delivery would drop roughly 30% for two quarters. She framed it as customer retention. The board, staring at two lost contracts, agreed.

- Velocity was removed as a metric. Not deprioritized. Removed: from dashboards, sprint reviews, and quarterly reports. The only surviving delivery metric was customer-reported incidents per month.

- Peer review became adversarial by design. Not hostile. Adversarial. Reviewers were told their job was to find reasons the code shouldn't ship. Reviews took longer. They were vastly more useful.

- The DoD was a conversation, not a checklist. Every sprint review asked: "Would you Friday-deploy this?" The team answered honestly, in front of each other. Social pressure did what checkboxes never could.

Customer incidents dropped around 80% in two quarters. No additional lost contracts. Two enterprise prospects cited reliability as a reason they signed.

But notice what it took: existential pressure, executive air cover, removal of velocity metrics, and willingness to cut delivery expectations by roughly 30%. Most organizations won't pay that price. So most DoDs remain negotiated fictions.

Auditing Your DoD for Political Theater

Want to know whether your DoD is a quality standard or a political document? Here's a diagnostic.

The Friday Deploy Test

For each item in your DoD, ask: "If this item is satisfied, does it meaningfully increase my confidence that this code won't cause a production incident?"

Not "does it prove someone did something." Does it increase confidence in production readiness? If the answer is no—if the item is about process evidence rather than production confidence—mark it as political.

The Last Incident Autopsy

Pull up your last three production incidents. For each:

- Was the DoD satisfied on the story that caused the incident?

- If yes, which DoD item should have caught it but didn't?

- If every item was satisfied and none would have caught it, your DoD isn't protecting you. It's protecting someone's career.

The Timing Test

Measure how long each DoD item actually takes to satisfy. If your code review averages under ten minutes for a 500+ line change, it's not a review. It's a rubber stamp.

If your testing item is satisfied with tests that took less time to write than the code they cover, that's coverage theater.
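If your code host can export review data, the timing test is easy to automate. Below is a minimal sketch in Python, assuming you can get each change's size plus the time review was requested and the time it was approved. The `Review` record and its field names are hypothetical, not any particular tool's API. It flags individual large changes approved in under ten minutes. Elapsed time from request to approval is an upper bound on actual review effort, so every flagged change got at most that much attention.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical record: map these fields to whatever your code host exports.
@dataclass
class Review:
    change_id: str
    lines_changed: int
    requested_at: datetime  # when review was requested
    approved_at: datetime   # when the approval landed

LARGE_CHANGE_LINES = 500                 # "a 500+ line change"
MIN_REVIEW_TIME = timedelta(minutes=10)  # "under ten minutes"


def rubber_stamps(reviews: list[Review]) -> list[str]:
    """Return IDs of large changes approved faster than MIN_REVIEW_TIME.

    requested_at -> approved_at is an upper bound on time spent reviewing,
    so every flagged change got at most that much attention.
    """
    flagged = []
    for r in reviews:
        elapsed = r.approved_at - r.requested_at
        if r.lines_changed >= LARGE_CHANGE_LINES and elapsed < MIN_REVIEW_TIME:
            flagged.append(r.change_id)
    return flagged


# Scenario 1 as data: 1,200 lines approved in six minutes (illustrative values).
t0 = datetime(2024, 3, 14, 16, 47)
reviews = [
    Review("payments-mr", 1200, t0, t0 + timedelta(minutes=6)),
    Review("typo-fix", 40, t0, t0 + timedelta(minutes=3)),
    Review("careful-review", 800, t0, t0 + timedelta(hours=2)),
]
print(rubber_stamps(reviews))  # ['payments-mr']
```

The thresholds are the ones from the test above. Tune them to your team, but be suspicious if tuning mostly serves to make the list shorter.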

The Rigor Conversation

Propose adding one genuinely rigorous item:

- "All error paths tested with failure injection"

- "Performance tested under 2x expected peak load"

- "Reviewed by someone with no context on the feature"

Observe the reaction. If the primary objection is velocity impact, you've confirmed your DoD is calibrated to delivery speed, not quality.

The Uncomfortable Question

Ask your team anonymously: "When was the last time a story was blocked from Done because it genuinely failed the Definition of Done?"

If nobody can remember a specific example, your DoD is not a gate. It's a rubber stamp with extra steps.

What an Honest DoD Would Look Like

Strip away the theater and write a DoD genuinely about production readiness. It would look nothing like what most teams have.

Shorter. Three to five items, not fifteen. Every item carries real weight.

Subjective in places. "The engineer is confident this won't cause a customer-facing issue" is a better gate than "unit test coverage exceeds 80%." You can game a coverage number. You can't easily game looking your teammates in the eye.

Enforceable with consequences. If the DoD is violated and an incident follows, there's a real retrospective about why the DoD failed to stop it, not just about why the code failed.

Uncomfortable for management. A real DoD slows delivery. If it doesn't, it's not rigorous enough. If nobody in management has ever pushed back on the DoD for being too strict, it has never been strict enough to matter.

Reviewed after every incident. Not annually. Not quarterly. After every incident—because every incident is evidence the DoD is insufficient.

A brutally honest DoD:

- The change has been tested in an environment that mirrors production topology, data volume, and traffic patterns.

- At least one reviewer has explicitly attempted to break the implementation and documented their attempts.

- The engineer who wrote the code can articulate, without notes, what happens if every external dependency this code touches is unavailable.

- There is a documented rollback plan that has been tested.

- The person deploying this would do it at 4:55 PM on Friday without notifying anyone.

That's a DoD that might actually prevent incidents. It's also one most organizations will never adopt, because it would cut velocity significantly and force a very uncomfortable conversation about what the business actually values.

The Root Cause

Most Definitions of Done are political documents, but not because teams are incompetent or Scrum Masters are negligent. They're political because the Agile-industrial complex built a framework where visible throughput is the primary success metric, then told teams to also care about quality.

You can't optimize for both without tradeoffs. Without hard conversations. Without someone telling a stakeholder that the thing they want in March isn't coming until May because the team is going to build it right.

The DoD is where that tension collapses into theater. The document where the organization writes down its quality aspirations while structurally ensuring they can never be met.

Stop editing the document. Start changing the system that made it necessary.

The Definition of Done is one artifact of a much larger dysfunction. If you want the full pattern (how the Agile-industrial complex turned engineering discipline into process theater), read AGILE: The Cancer of the Software Industry. It's not a comfortable read. It's not supposed to be.