Stop Forcing AI Development Into Agile. It's Killing Your Projects

You're in sprint planning. The product owner asks: "How many story points for training the sentiment analysis model?"

Silence.

Someone mumbles "five." Another engineer counters with "eight." Nobody knows. You're not building a login form. You're exploring whether transfer learning from BERT will even work for your domain-specific text. You might discover in three days that the entire approach is wrong.

The planning poker cards come out anyway.

This is where Agile dies—and takes your AI project with it.

The Predictability Fallacy

Agile emerged from software development in the early 2000s, with the Agile Manifesto published in 2001. Break work into predictable increments. Deliver consistently. Improve velocity over time.

That works when you know what you're building.

AI development doesn't work that way. You're conducting experiments. Half fail. The other half reveal your original hypothesis was wrong, sending you in a completely different direction.

Research on ZDNet found that AI practitioners report traditional Agile practices like sprint planning become "sources of friction" rather than enablers of productivity.

The fundamental conflict: Agile demands predictability. AI development is inherently unpredictable.

You can't estimate how long it takes to achieve specific model accuracy. You can't promise feature engineering will improve your F1 score by Tuesday. You can't commit to a production-ready recommender system by end of Q2.

You can only commit to learning.

The Two-Week Fiction

The sprint is the atomic unit of Scrum, the most common flavor of Agile. Typically two weeks to deliver working software.

Now imagine this: Week one, you set up your training pipeline. Week two, you discover your data has a catastrophic label imbalance nobody noticed during collection.

Sprint deliverable: A documented failure and a pivot to collecting more data.

By Agile metrics, you delivered zero story points. By research metrics, you made critical progress—you eliminated a path that wouldn't work and identified the real blocker.
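The label imbalance in this scenario is cheap to check before any training pipeline exists. A minimal sketch, assuming the labels are available as a plain Python list; the function name `label_imbalance_report` and the 10:1 warning threshold are illustrative assumptions, not an established standard:

```python
from collections import Counter


def label_imbalance_report(labels, max_ratio=10.0):
    """Summarize class counts and flag a large majority/minority gap.

    max_ratio is an illustrative threshold, not an established standard.
    """
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must not be empty")
    majority = max(counts.values())
    minority = min(counts.values())
    ratio = majority / minority
    return {
        "counts": dict(counts),
        "ratio": ratio,
        "imbalanced": ratio > max_ratio,
    }


# Hypothetical sentiment labels: 95 negative, 5 positive.
report = label_imbalance_report(["neg"] * 95 + ["pos"] * 5)
```

Running a check like this during data collection would turn the week-two surprise into a day-one finding, though a classifier that never sees a rare class at all will not show up in the counts and needs a separate check.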

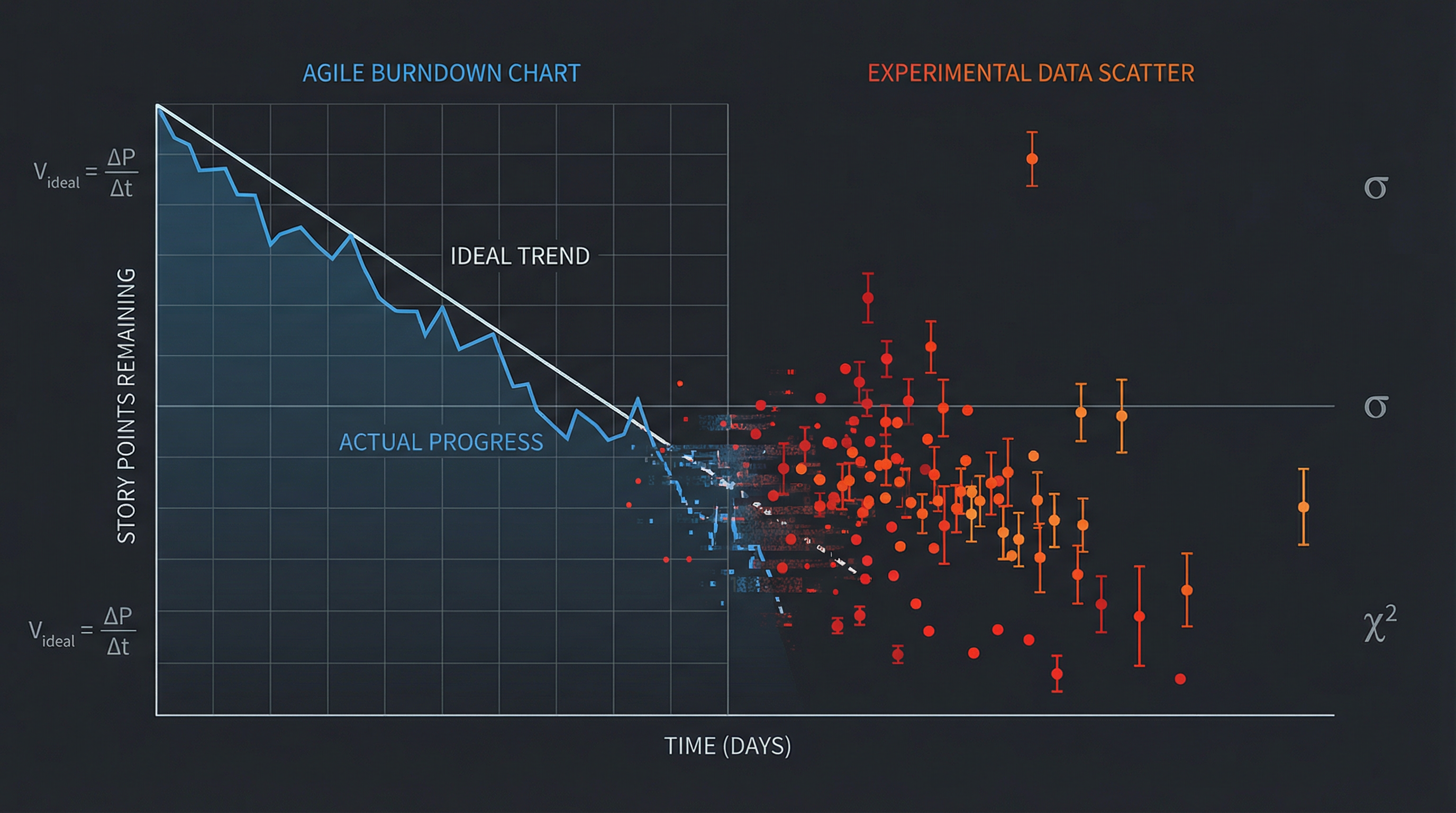

Try explaining that to a Scrum Master holding a burndown chart.

The worst part? Teams start gaming the system. They pad estimates. They break "research spikes" into fake deliverables. They commit to what they can measure rather than what matters.

They stop doing good science to satisfy a methodology designed for e-commerce checkouts.

Beyond User Stories

"As a user, I want the model to achieve 95% accuracy so that I can trust the predictions."

This user story is nonsense. I've seen dozens like it in real backlogs.

User stories assume deterministic features. Click button, receive output. The format works beautifully for CRUD applications.

It falls apart for AI because AI development isn't feature delivery—it's hypothesis testing.

You don't build an accurate model the way you build a responsive navbar. You form hypotheses. Design experiments. Collect data. Analyze results. Iterate.

Sometimes you discover accuracy is the wrong metric. Sometimes you learn the business problem can't be solved with available data. Sometimes a simple heuristic outperforms your fancy neural network.

These are research outcomes, not user stories.

The Experiment Log vs. The Sprint Backlog

I once joined an AI team that had abandoned sprint backlogs entirely. Instead, they maintained an experiment log.

Each entry contained:

- A hypothesis ("Augmenting training data with synthetic examples will improve model recall on edge cases")

- The experiment design

- Success criteria (actual metrics, not story points)

- Results and learnings

- Next steps

No story points. No velocity. No burndown charts. Just rigorous science.
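The entry structure listed above maps naturally onto a small record type. This is a sketch of how such a log entry might look, not the team's actual tooling; the class name `ExperimentEntry`, its field names, and the sample values are all assumptions made for illustration:

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ExperimentEntry:
    """One experiment log entry; fields mirror the list above."""
    hypothesis: str
    design: str
    success_criteria: dict                       # metric name -> minimum value
    started: date
    results: dict = field(default_factory=dict)  # metric name -> observed value
    learnings: str = ""
    next_steps: str = ""

    def outcome(self) -> Optional[bool]:
        """True if every criterion is met, False if not, None if no results yet."""
        if not self.results:
            return None
        return all(
            self.results.get(metric, float("-inf")) >= minimum
            for metric, minimum in self.success_criteria.items()
        )


entry = ExperimentEntry(
    hypothesis="Augmenting training data with synthetic examples "
               "will improve model recall on edge cases",
    design="Train with and without synthetic examples; "
           "compare on a held-out edge-case set",
    success_criteria={"edge_case_recall": 0.80},  # illustrative target
    started=date(2024, 1, 8),                     # illustrative date
)
pending = entry.outcome()
entry.results = {"edge_case_recall": 0.74}        # illustrative result
met = entry.outcome()
```

A rejected hypothesis (`met` is `False` here) is still a completed entry with learnings attached, which is the point of logging experiments rather than stories.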

They shipped a production model in four months. The previous team, running two-week sprints with story point estimation, had spent eight months delivering nothing but PowerPoint decks.

The difference? They stopped pretending AI development was predictable.

Velocity Metrics: Measuring the Wrong Things

Velocity is Agile's favorite vanity metric. Points per sprint. Completed stories per iteration. Burnup charts trending toward done.

It's garbage for AI work.

Velocity assumes consistent progress. Points in sprint N predict points in sprint N+1. Steady output equals steady value.

None of this holds.

You might spend three sprints achieving modest accuracy improvements, then have a breakthrough in sprint four that solves the entire problem. Or maintain "velocity" while pursuing a dead-end approach that gets scrapped.

Practitioners on Reddit consistently report that traditional Agile metrics "don't capture the experimental nature of AI work" and often incentivize the wrong behaviors.

The Story Point Theater

Here's what actually happens when you force story points onto AI work:

The team learns to estimate conservatively. Every task becomes an 8 or a 13. Nobody wants to explain why "tune hyperparameters" took three days instead of one because the learning rate turned out to be causing exploding gradients.

Or they slice work into meaningless increments. "Preprocess data" becomes five separate stories: load data, handle missing values, normalize features, encode categoricals, split train/test.

You've gamified your backlog while learning nothing about whether your model will work.

Story points measure throughput, not discovery. AI development is about discovery.

Every hour estimating story points for AI research is an hour stolen from actual research.

What to Measure Instead

Track these instead:

- Experiments completed

- Model performance metrics (precision, recall, F1, AUC, business-specific KPIs)

- Data quality improvements

- Time from hypothesis to validated results

- Production model impact on business outcomes

None fit on a burndown chart. All matter more than velocity.
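For readers who want the model performance metrics spelled out, precision, recall, and F1 follow directly from confusion-matrix counts. A minimal sketch with illustrative counts; the function name is an assumption, and returning 0.0 when a denominator is zero is one common convention rather than the only one:

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Illustrative counts: 80 true positives, 20 false positives, 20 false negatives.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=20)
```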

Measure experimental throughput: hypotheses tested per month, not points per sprint. Measure learning velocity: how quickly does the team eliminate dead ends and converge on working approaches?
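Both measures can be derived from nothing more than the start and end dates of each concluded experiment. A rough sketch, assuming experiments are recorded as `(start, end)` date pairs; the function names and the sample dates are hypothetical:

```python
from collections import Counter
from datetime import date
from statistics import median


def throughput_by_month(experiments):
    """Count concluded hypotheses per (year, month) of their end date."""
    return Counter((end.year, end.month) for _, end in experiments)


def median_days_to_result(experiments):
    """Median number of days from posing a hypothesis to a validated result."""
    return median((end - start).days for start, end in experiments)


# Illustrative (start, end) dates for four concluded experiments.
experiments = [
    (date(2024, 1, 2), date(2024, 1, 9)),
    (date(2024, 1, 10), date(2024, 1, 24)),
    (date(2024, 1, 15), date(2024, 2, 5)),
    (date(2024, 2, 1), date(2024, 2, 11)),
]
per_month = throughput_by_month(experiments)
typical_days = median_days_to_result(experiments)
```

The median is used instead of the mean so that one long-running experiment does not dominate the figure; that choice is a judgment call, not a rule.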

Measure model performance against business metrics. Does the recommender increase conversion? Does the classifier reduce manual review time? Does the forecasting model improve inventory decisions?

Case Studies: AI Teams That Abandoned Agile and Thrived

Three teams. Three different approaches. Zero sprints.

Team A: The Research Lab Model

A fraud detection team at a fintech company ditched sprints entirely. Each engineer owned an area of investigation. One focused on feature engineering. Another explored different model architectures. A third worked on inference optimization.

They met twice a week for 90 minutes. Not for stand-ups—for journal clubs. Each meeting, someone presented experimental results. The team discussed implications, challenged assumptions, proposed follow-up experiments.

No sprint planning. No story points. No retrospectives about process. Just rigorous peer review.

Outcome: They reduced false positives by 40% while catching 15% more fraud in six months. The previous Agile-managed team had spent nine months arguing about whether data pipeline work should count toward velocity.

Team B: The Hypothesis-Driven Approach

An ML team building a recommendation engine replaced their sprint cycle with hypothesis cycles. Each cycle lasted as long as it took to test a meaningful hypothesis—sometimes a week, sometimes four weeks. No artificial two-week boundaries.

They tracked experiments in a simple spreadsheet:

- Hypothesis

- Expected outcome

- Actual outcome

- Confidence level

- Next hypothesis
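A spreadsheet like this can be as plain as a CSV file with those five columns. The sketch below is an assumption about how one might keep it, not a description of the team's actual file; the column identifiers, the `append_row` helper, and the sample row are invented for illustration:

```python
import csv
import io

# Column names follow the spreadsheet fields listed above.
FIELDS = ["hypothesis", "expected_outcome", "actual_outcome",
          "confidence_level", "next_hypothesis"]


def append_row(handle, row, write_header=False):
    """Append one experiment record to an open CSV file-like object."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    if write_header:
        writer.writeheader()
    writer.writerow(row)


# An in-memory buffer stands in for the shared spreadsheet file.
buffer = io.StringIO()
append_row(buffer, {
    "hypothesis": "Recency-weighted features improve click-through",  # illustrative
    "expected_outcome": "CTR up",
    "actual_outcome": "no measurable change",
    "confidence_level": "medium",
    "next_hypothesis": "Try session-level features",
}, write_header=True)
rows = list(csv.DictReader(io.StringIO(buffer.getvalue())))
```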

Stakeholders received updates when hypotheses were validated or rejected, not on arbitrary sprint boundaries.

The team reported significantly lower stress. More importantly, they shipped a production recommender that increased click-through rates by 22%.

Team C: The Dual-Track System

One team recognized that AI projects have two distinct types of work: research and engineering.

Research work (model development, experimentation, data exploration) ran on hypothesis-driven cycles with no story points.

Engineering work (API development, infrastructure, monitoring, deployment) ran on lightweight Agile with weekly planning.

Different work, different processes.

They explicitly separated "we're exploring" work from "we're building" work. Research phases had no delivery commitments beyond learning goals. Engineering phases had clear deliverables and timelines.

The result: Research proceeded without artificial deadlines killing good science. Engineering delivered reliable infrastructure without getting dragged into model development uncertainty. Stakeholders knew which phase the team was in.

A New Framework: Research-First Development for AI

If Agile doesn't work, what does?

Here's a framework I've seen work repeatedly. It's not novel—it's borrowed from how research labs operate. It just requires admitting that AI development is applied research, not software construction.

Phase 1: Problem Validation (Don't Skip This)

Before writing any code:

- Define the business problem in measurable terms

- Identify minimum viable model performance needed for business value

- Assess data availability and quality

- Determine if ML is even the right approach (sometimes it isn't)

Time: 1-2 weeks

Deliverable: A problem spec that answers "What does success look like?" and "Is this feasible?"

No story points. No sprint commitment. Just honest assessment.

Phase 2: Exploratory Research

Now you explore. Pure research phase.

Activities:

- Data exploration and quality assessment

- Baseline model development

- Feature engineering experiments

- Architecture exploration

- Hypothesis generation and testing

Time: As long as it takes to find a viable approach or determine one doesn't exist. Typically 4-12 weeks.

Cadence: Weekly research reviews where the team presents findings, discusses blockers, proposes next experiments.

Deliverable: A validated approach that meets minimum performance requirements, OR a clear recommendation that the problem can't be solved with available data/resources.

This phase looks nothing like Agile. No sprints. No velocity metrics. No "commitment" beyond rigorous investigation.

Stakeholders receive weekly updates on learnings, not completed stories.

Phase 3: Engineering for Production

Once you have a working model, you engineer.

This phase CAN use Agile-like practices if your team finds them useful:

- Weekly planning

- Clear deliverables (API endpoint, monitoring dashboard, A/B test framework)

- Predictable scope

Why? Because you're building deterministic systems now, not conducting experiments.

Activities:

- Model optimization

- API development

- Deployment pipeline

- Monitoring and alerting

- Documentation

Time: 2-6 weeks

Deliverable: Production-ready ML system

Phase 4: Continuous Improvement

After launch, you return to research mode.

Monitor model performance. Generate hypotheses about improvements. Run experiments. Iterate.

This is not maintenance. It's a new research cycle.

Practical Checklist: Transitioning Your AI Team Away from Agile

If you're drowning in dysfunctional Agile practices, here's your escape route:

Week 1:

- Stop estimating story points for research work immediately

- Cancel sprint planning for AI research tasks

- Create an experiment log to replace your sprint backlog

Week 2:

- Reframe work in terms of hypotheses, not user stories

- Identify your current phase: exploring or engineering?

- Set up weekly research reviews instead of daily stand-ups

Week 3-4:

- Define business success metrics for your model (not velocity metrics)

- Communicate the new approach to stakeholders

- Archive your story point history—it's worse than useless

Month 2:

- Evaluate whether your Scrum Master role makes sense (it probably doesn't for research work)

- Consider separate tracks for research vs. engineering work

- Measure experimental throughput: hypotheses tested, learnings documented, model improvements

Month 3:

- Assess team morale and productivity—you should see improvement

- Document what's working in your research-first approach

- Prepare to defend your choices when someone tries to "fix" your lack of velocity metrics

Talking to Stakeholders and Leadership

The hardest part isn't changing your process. It's managing up.

Executives love Agile because it creates the illusion of predictability. Burndown charts look scientific. Velocity metrics feel measurable.

Replace that comfort with something better: honesty.

Here's the script:

"We're treating AI development as research, not construction. You'll receive weekly updates on experimental results and learnings. We'll tell you when we've validated an approach that works, or when we've determined an approach won't work. We won't commit to artificial deadlines that compromise scientific rigor. We'll get you to production faster by not pretending this work is predictable."

Some executives will hate this. Those are the ones who prefer comforting lies to uncomfortable truth.

The good ones will appreciate the honesty and focus on outcomes instead of theater.

The Uncomfortable Truth

Here's what nobody wants to admit: Agile succeeded not because it's the best development methodology, but because it gave management a sense of control.

Story points, velocity, burndown charts—these artifacts exist primarily to soothe executive anxiety. They create the appearance of measurable progress.

For web development, the cost of this theater is tolerable. You lose some efficiency, but the work is predictable enough that Agile doesn't destroy it.

For AI development, the cost is catastrophic.

When you force experimental work into a framework designed for predictable construction, you get the worst of both worlds: the overhead of Agile ceremonies without any benefits of predictability.

Teams spend hours in planning meetings estimating work they can't estimate. Researchers game velocity metrics instead of pursuing good science. Projects look healthy on dashboards while producing nothing of value.

The solution isn't to tweak Agile. It's not "Agile for AI" or "AI-adapted Scrum."

It's recognizing that AI development is research, applying research methodologies, and abandoning the pretense that you can sprint your way to a trained model.

Your next planning poker meeting is optional.

Your next experiment is not.

I've spent twenty-five years watching engineers try to fix broken processes. The ones who succeeded had data, allies, and patience. The ones who failed had opinions, frustration, and a retro slot.
